Tesla owner almost crashes into a moving train in “autonomous driving” mode (4 photos + 1 video)


22 May 2024

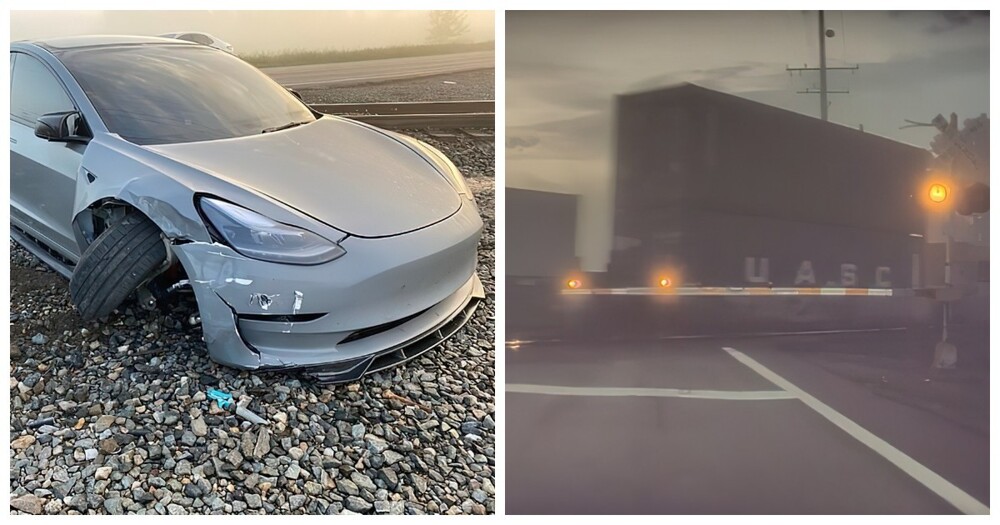

The Tesla owner blames Full Self-Driving (FSD) mode, in which the car follows a set route without the driver steering, for the fact that his car headed straight toward railway tracks where a train was passing at that moment. The driver managed to correct course at the last moment, and he says this is not the first time he has encountered such FSD “quirks”.

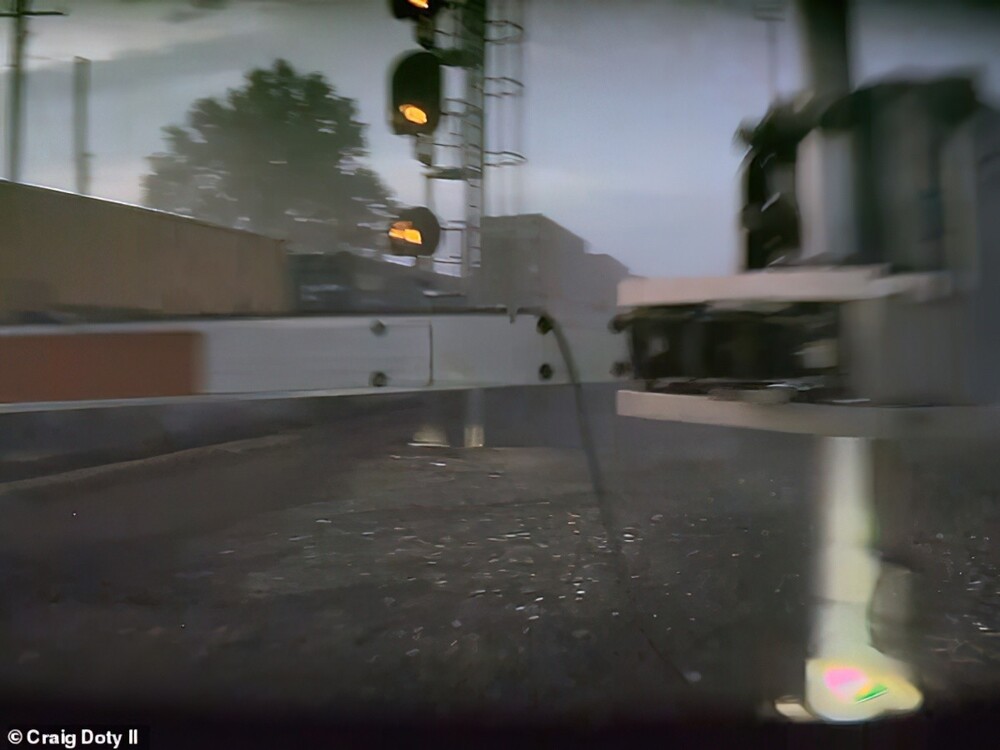

The dash cam footage shows the car in the dark and fog quickly approaching a passing train with no signs of slowing down.

Craig Doty II of Ohio claims his car was in Full Self-Driving (FSD) mode at the time and did not slow down despite a train crossing the road. He did not specify the model of the car.

The driver managed to react and steer to the right, knocking down the barrier at the railway crossing and stopping a few meters from the moving train.

Tesla has faced numerous lawsuits alleging that its FSD or Autopilot feature caused crashes by either failing to stop for another vehicle or veering into an object, in some cases killing drivers.

The car after colliding with the barrier. Thankfully, it was not the train.

According to the US National Highway Traffic Safety Administration (NHTSA), as of April 2024, Tesla Autopilot systems on Models Y, X, S and 3 have been involved in 17 fatalities and 736 crashes since 2019.

Craig Doty II reported the problem on the Tesla Motors Club website, saying he has owned the car for less than a year, but "in the last six months it has attempted to drive directly into a passing train twice while in FSD mode."

Doty said he tried to report the incident and find similar cases, but couldn't find a lawyer who would take his case because he didn't suffer significant injuries — just a sore back and a bruise.

Tesla cautions drivers against using the FSD system in poor lighting or poor weather conditions such as rain, snow, direct sunlight and fog, which can "significantly impair performance."

This is because poor visibility interferes with Tesla's sensors, which include ultrasonic sensors that bounce high-frequency sound waves off nearby objects. Also used are radar systems, which emit radio waves to detect nearby vehicles, and 360-degree cameras. All of these systems collect data about the surrounding area, including road conditions, traffic levels and nearby objects, but in poor visibility they cannot accurately assess the surrounding environment.

When asked why he continued to use the FSD system after the first close encounter, Doty said he believed it was working properly because he had not had any other problems for some time.

“Once you've used the FSD system without problems for a while, you start to trust it to work properly, just like adaptive cruise control,” he said. “You assume the car will slow down as it approaches the car in front, until it suddenly doesn't and you have to take control. This kind of overconfidence can develop over time because the system usually works as it should, so failures like this are particularly worrisome.”

Tesla's manual warns drivers not to rely on FSD alone. Drivers must keep their hands on the steering wheel at all times, "be aware of road conditions and surrounding traffic, pay attention to pedestrians and cyclists, and always be ready to take immediate action."

Tesla's Autopilot system is blamed for a horrific crash that killed a Colorado man driving home from a golf course, according to a lawsuit filed May 3. Hans von Ohain's family claims he was using the Autopilot system of a 2021 Tesla Model 3 on May 16, 2022, when the car veered sharply to the right off the road. However, Eric Rossiter, who was a passenger, says the driver was heavily intoxicated at the time of the crash. Von Ohain tried to regain control of the car but was unable to, and he was killed when the car collided with a tree and burst into flames. An autopsy later showed that his blood alcohol level at the time of death was three times the legal limit.

In 2019, a Florida man also died when his Tesla Model 3's Autopilot failed to brake in time. As a result, the car slid under a trailer and the driver died instantly.

Last October, Tesla won its first lawsuit over allegations that its Autopilot feature led to the death of a Los Angeles man when a Model 3 veered off a highway and crashed into a palm tree before bursting into flames.

The National Highway Traffic Safety Administration (NHTSA) has investigated a number of crashes involving Autopilot and said "weak driver engagement systems" contributed to the crashes. The feature "resulted in predictable misuse and avoidable crashes," the NHTSA report said, adding that the system did not "sufficiently ensure driver attention and proper use."

In December, Tesla released a software update to two million vehicles in the US that was supposed to improve the performance of the Autopilot and FSD systems, but now, in light of new crashes, NHTSA has suggested that the update was not enough.

Elon Musk has not commented on the NHTSA report, but he has previously claimed that people who use the Autopilot feature are, on average, 10 times less likely to get into accidents.

“People are dying because of misplaced trust in Tesla's Autopilot capabilities. Even simple steps can improve safety,” Philip Koopman, an auto safety researcher and professor of computer engineering at Carnegie Mellon University, told CNBC. “Tesla could automatically limit Autopilot to designated roads based on mapping data already in the car. Tesla could also improve monitoring so that drivers cannot routinely become absorbed in their cell phones while Autopilot is running.”